AI coding tools promise unprecedented speed: prompt for a feature, receive complete implementations in minutes, ship faster than ever. The speed is compelling. Why spend hours writing a feature when AI can draft it in minutes? Because speed without validation creates more problems than it solves.

The Hidden Cost of AI-Generated Code

You prompt AI to build a feature. It generates 200 lines of code instantly. Now you face multiple validation problems simultaneously:

Functional uncertainty. You don’t know if the code works. Does it compile? Does it execute without errors? Does it produce correct results?

Requirement misalignment. AI interpreted your prompt, but that interpretation might be wrong. The code might solve a different problem than you intended.

Edge case gaps. You don’t know if it handles boundary values, error conditions, or unusual inputs correctly.

Design quality concerns. Poor coupling, unclear structure, or tight dependencies might make future changes unnecessarily difficult.

You’re evaluating all of this in code you didn’t write, without a systematic validation process.

The typical response is to skim the implementation, test obvious cases, and hope you caught important issues. Sometimes you do. Often you don’t. Edge cases fail in production. Performance degrades under load. The structure makes the next feature more complex to add. You spend more time debugging generated code than you saved generating it. The speed advantage disappears.
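A minimal sketch of how this plays out, assuming a hypothetical generated helper named `average` (the function, the `check` helper, and the inputs are illustrative, not taken from any real project). The obvious case passes, so a quick skim would approve it. The empty-input case is the kind of gap that surfaces later.

```python
def average(values: list[float]) -> float:
    # Hypothetical generated code: correct for the obvious case only.
    return sum(values) / len(values)


def check(label: str, func) -> str:
    """Run one scenario and describe the outcome instead of crashing."""
    try:
        return f"{label}: {func()}"
    except Exception as exc:
        return f"{label}: raised {type(exc).__name__}"


print(check("typical input", lambda: average([2.0, 4.0])))  # typical input: 3.0
print(check("empty input", lambda: average([])))  # empty input: raised ZeroDivisionError
```

Nothing in the implementation looks wrong at a glance. The problem only shows up when someone asks what should happen for an empty list, which is a requirements question the generated code never answered.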

Why AI Cannot Validate Its Own Code

The obvious response is to ask AI to validate what it generated. This approach fails because AI validates against its own interpretation, not your actual requirements.

You prompt AI to build feature X. AI interprets this as feature Y (a misinterpretation). AI implements Y. When you ask AI to validate, it checks whether it successfully did what it understood, confirms yes, and reports success. The validation loop is closed. If AI misunderstood initially, asking it to validate just confirms it successfully built the wrong thing.
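The closed loop can be illustrated with a small sketch. Assume a hypothetical requirement that orders over 100 qualify for a discount, and assume the model read "over 100" as "100 or more". All names and the threshold here are invented for illustration.

```python
def qualifies_for_discount(order_total: float) -> bool:
    # Hypothetical generated implementation: "over 100" was read as "100 or more".
    return order_total >= 100


def ai_self_check() -> bool:
    # A check derived from the same interpretation agrees with the implementation.
    return qualifies_for_discount(100) is True


def requirement_check() -> bool:
    # A check written from the actual requirement: exactly 100 does not qualify.
    return qualifies_for_discount(100) is False


print(ai_self_check())      # True
print(requirement_check())  # False
```

The self-check passes because it shares the misreading. Only the check that comes from outside the model's interpretation exposes the gap.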

You need validation independent of AI’s interpretation. Something that verifies the code does what you actually need.

Why Detailed Prompts Don’t Solve This Problem

Teams try specifying requirements and implementation guidelines more precisely, on the theory that a perfect prompt with every detail yields perfect code.

This fails because you can’t specify everything upfront. Requirements and design clarify through building. Edge cases reveal themselves during implementation. You discover what you actually need by working through problems incrementally.

The movement toward massive upfront specifications and detailed user stories that AI converts into code takes teams back to phased development with big upfront requirements documents and design. In the early 2000s, the industry started to move away from this approach because the cycle of discovering that what customers thought they wanted didn’t meet their needs or deliver value was too costly and time-consuming. AI doesn’t solve this fundamental problem.

Even with perfect specifications, you still receive hundreds of lines of generated code. You still must verify it works, meets customer needs, and has good design simultaneously. You still lack systematic validation.

Neither detailed prompts nor AI self-validation solves the fundamental problem. You need a process that validates independently of AI’s interpretation, guides design explicitly, and catches problems before they compound. Test-Driven Development provides this.

Understanding Test-Driven Development with AI

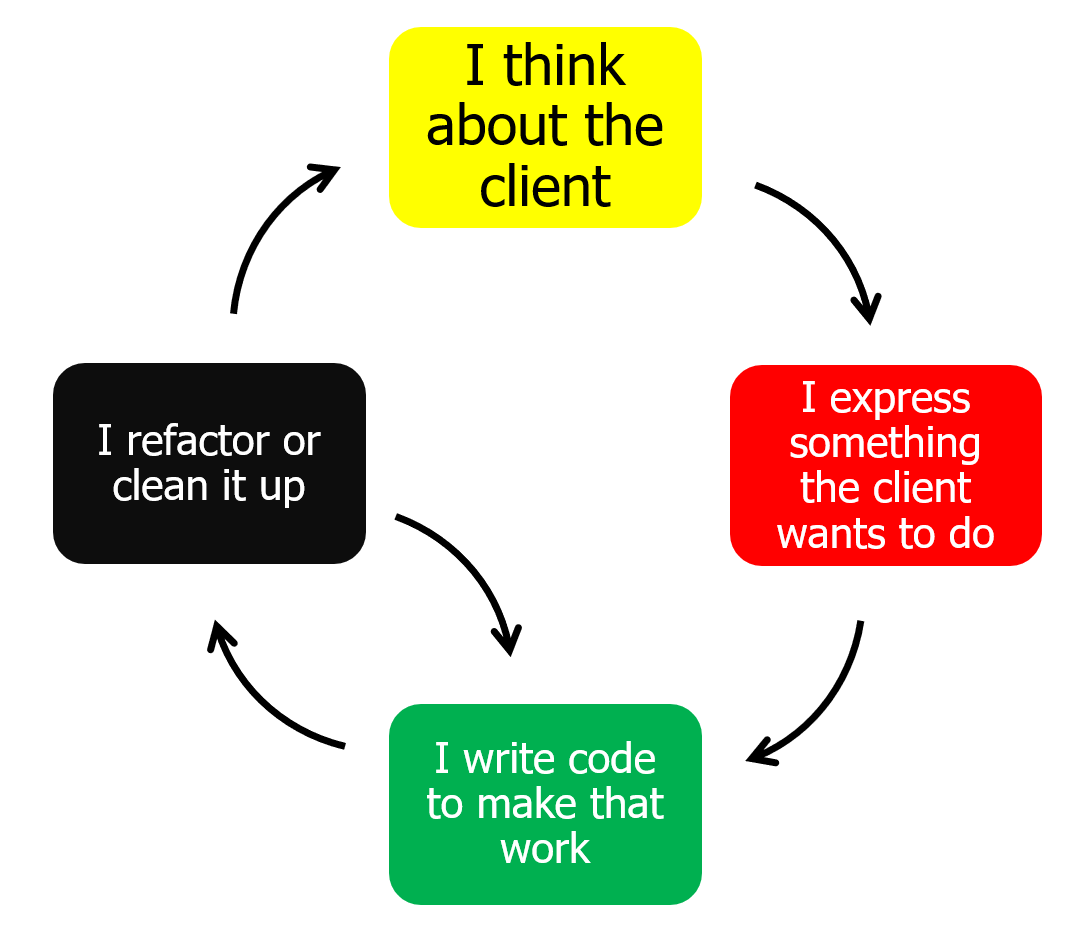

Test-Driven Development (TDD), like Behavior-Driven Development, is a customer-centric design and development technique. It follows the Red-Green-Refactor cycle: write a failing test that represents a client need (user or system), make it pass, then improve the structure.

Writing the test first forces design decisions before implementation exists. What’s the interface? How will this be called? What are the inputs and outputs? What behavior do we need? You’re designing from the perspective of how the code will be used, not how it will be implemented. This produces cleaner, more usable interfaces.

The refactor step is where most design work happens. After getting a test to pass, you improve the structure. You remove duplication, clarify names, extract methods, improve organization. Tests keep you safe while you make these changes.

This incremental and iterative approach prevents quality problems before they happen. Poor coupling, unnecessary complexity, and unclear structure get addressed continuously through refactoring, not caught later in review.

With AI, this discipline becomes more critical, not less. You’re not writing the implementation, so the test-first approach is your only mechanism for ensuring AI builds what you actually need. The refactoring step is your only systematic opportunity to guide the design instead of accepting whatever structure AI generates.

How TDD Changes the AI Code Generation Workflow

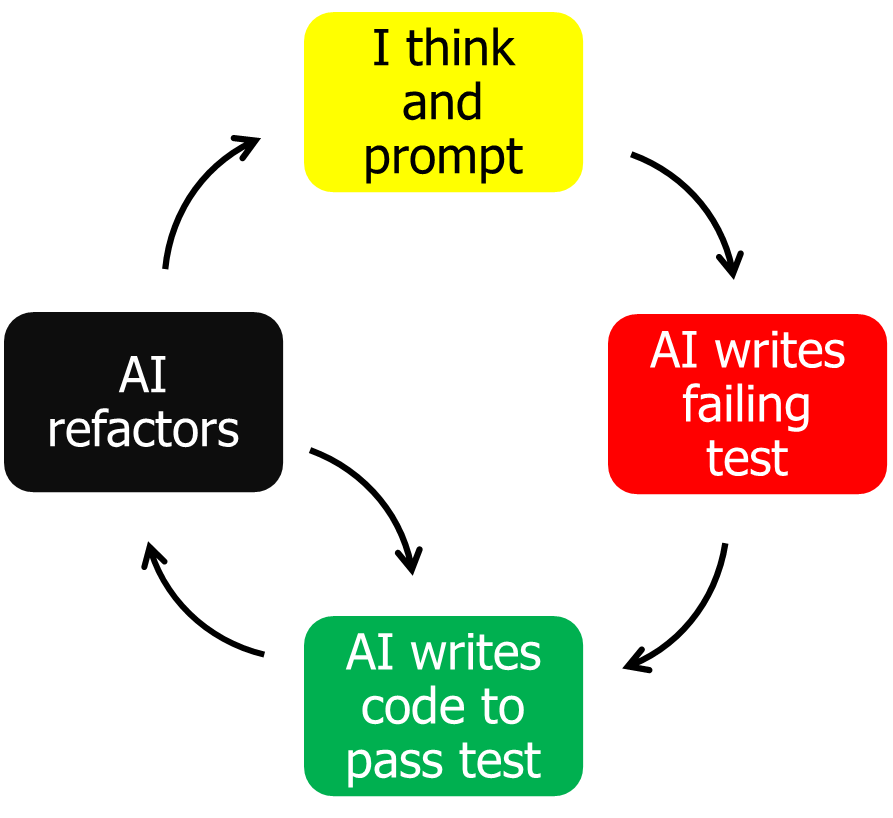

The same Red-Green-Refactor cycle applies when using AI for code generation, but AI handles the mechanical work while you maintain the discipline.

You think about the requirement or customer need. You prompt AI to write a test for the next small behavior that meets the requirement. The test fails because no implementation exists yet. You review the test to verify it captures requirements correctly and fix it if needed. Then you prompt AI to write implementation to pass the test. When the test passes, you have automatic validation of functional correctness. You review the implementation to decide if refactoring is needed. If yes, you prompt AI to refactor while tests ensure nothing breaks. Then you repeat for the next small piece of behavior.

Traditionally, developers cycle through Red-Green-Refactor 30 to 40 times, taking small steps. With AI, the loop runs twice as fast. You think and prompt, and AI completes the loop. The steps are larger, much as an experienced developer sees ahead for obvious functionality and takes larger steps, then backtracks to smaller steps for less obvious work.

AI makes the cycle faster, but the discipline remains the same.

The Critical Review Points in AI-Driven TDD

Reviewing the Test Validates Requirements and Design

When you review the test AI generated, you’re checking two things.

First, requirement accuracy. Did AI understand the requirements correctly? Does this test specify the right behavior?

Second, design quality. Are the design decisions good? Is this a reasonable interface? Are we asking for the right inputs and outputs?

Tests are small and focused on behavior without implementation details. This makes them significantly easier to review than implementation code. A test might be 5-10 lines. The implementation might be 50-200 lines.

More importantly, reviewing the test catches misunderstandings before implementation exists. If the test is wrong, you fix it immediately. You don’t waste time reviewing implementation built to satisfy wrong requirements.

Reviewing the Implementation Improves the Design

Once the test passes, you know the code works functionally. Your implementation review has a specific purpose: does this need refactoring?

The test-first discipline and continuous refactoring prevent quality problems from occurring. You’re deciding whether the current structure is good enough or should be improved now.

If refactoring is needed, AI does the mechanical work. Tests provide the safety net. If refactoring breaks something, tests fail immediately.
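Here is a small sketch of that safety net, assuming a hypothetical `normalize_email` function and a refactor that accidentally drops one behavior. The names, the test cases, and the `run_tests` helper are invented for illustration.

```python
def normalize_email(raw: str) -> str:
    # Behavior before the refactor: trim whitespace, then lowercase.
    return raw.strip().lower()


def normalize_email_refactored(raw: str) -> str:
    # Hypothetical refactor that accidentally dropped the strip() call.
    return raw.lower()


def run_tests(normalize) -> list[str]:
    """Return the names of failing checks; an empty list means all pass."""
    cases = {
        "lowercases": ("Ada@Example.COM", "ada@example.com"),
        "trims whitespace": ("  ada@example.com ", "ada@example.com"),
    }
    return [
        name
        for name, (raw, expected) in cases.items()
        if normalize(raw) != expected
    ]


print(run_tests(normalize_email))             # []
print(run_tests(normalize_email_refactored))  # ['trims whitespace']
```

The failing check names the lost behavior directly, so the fix is a follow-up prompt rather than a debugging session.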

Why This Approach Solves the Validation Problems

This approach solves the validation problems AI creates:

Independent validation. Because you review the test separately, you verify AI understood correctly before implementation happens. Tests then provide automatic validation that implementation matches requirements. This solves the requirement misalignment problem where AI’s interpretation might be wrong.

Explicit design control. Test-first forces good design decisions incrementally. You’re thinking about interfaces and behavior before implementation. You decide what to build next through test selection and guide design through refactoring decisions. This solves the design quality problem where you’d otherwise accept whatever structure AI generates.

Immediate feedback. Tests tell you instantly when something breaks. You’re never far from working code. Problems are caught in seconds, not days. This solves the functional uncertainty problem where you don’t know if generated code actually works.

Continuous quality. The refactor step addresses coupling, complexity, and structure continuously. Quality issues are prevented or fixed immediately, not discovered weeks later.

The Speed Objection: Why TDD With AI Is Faster, Not Slower

TDD with AI sounds slower than generating complete features and fixing problems later, but it isn’t.

When you generate code without tests, you spend time:

- Reading hundreds of lines of generated code to understand what it does

- Testing multiple scenarios to verify behavior

- Debugging when edge cases fail

- Refactoring when the structure makes new features difficult

- Documenting what the code actually does

When you use TDD with AI, you spend time:

- Writing or reviewing small tests that specify behavior

- Reviewing small implementations that pass those tests

- Refactoring with a safety net that catches breaks instantly

The second workflow front-loads design work and validation. The first workflow back-loads it and does it under pressure when code is already in production or blocking other work.

TDD with AI feels slower because you’re solving problems before they exist. It’s faster because you’re not debugging code you don’t understand or untangling structure you didn’t design.

Why Teams Without TDD Struggle With AI Code Generation

Using AI without Test-Driven Development means:

- Prompting for complete features

- Receiving complete implementations

- Reviewing for correctness, design quality, and functional requirements simultaneously

- No incremental validation as you build

- No safety net for changes

- No captured knowledge of requirements

- Design decisions made implicitly by AI, not explicitly by you

You generate code fast but have no systematic way to ensure it’s correct or well-designed until you test it or it reaches production.

Teams that adopt TDD with AI get implementation speed with design discipline. The test-first approach provides validation and guides design. Refactoring keeps code clean. Each step is small and verified.

The Real Advantage of Combining TDD and AI

AI doesn’t eliminate the need for good software development practices. It makes those practices more valuable.

As agile engineering practices evolve in the age of AI, the discipline that produced quality code before AI produces quality code with AI: iterative and incremental development, test-first thinking, continuous refactoring, explicit design decisions. AI amplifies both good and bad practices. Without discipline, teams generate bad code faster and accumulate technical debt that eventually slows delivery.

The difference is speed. AI handles implementation and refactoring faster than humans can type. But the discipline of writing tests first, making deliberate design decisions, and improving structure continuously makes the speed useful instead of dangerous.

TDD with AI isn’t about AI writing tests for validation. It’s about humans maintaining design discipline while AI handles mechanical implementation work. You’re actively developing software through incremental, validated steps. AI accelerates the cycle, but you remain in control of what gets built and how it’s structured.

Teams using AI without TDD generate code fast and discover design problems late, when they’re expensive to fix. Teams using TDD with AI build fast with good design from the start. The difference compounds over months. Code built without discipline becomes harder to change, requiring more time to add features or fix bugs. Code built with discipline remains malleable, allowing teams to move faster as the codebase grows rather than moving slower.

The practices change. The human role evolves. But the principles remain true. Continuous attention to technical excellence and good design enhances agility, perhaps more than ever.

Learn AI Test-Driven Development

Understanding why TDD matters with AI is the first step. Learning how to effectively prompt AI to follow test-driven development practices, create comprehensive test suites, and maintain code quality while accelerating delivery requires hands-on practice.

Our AI Test-Driven Development Workshop teaches developers to maintain quality while maximizing AI acceleration. You’ll learn to guide AI through the Red-Green-Refactor cycle, review AI-generated tests and code effectively, and build applications that are both fast to write and reliable in production. Over 70% of the workshop is hands-on coding where you apply these techniques to real development scenarios.

Ready to transform how your team builds software with AI? Learn more about the workshop.